Automated vehicle technology was supposed to end car crashes forever — but a new study suggests that the more autonomous a car gets, the more reckless its driver may become, negating many of the technology's safety benefits to vulnerable road users when "smart" cars make mistakes.

A recent study by the Insurance Institute for Highway Safety (IIHS) and MIT found that drivers began showing troubling signs of "disengagement" once they grew too comfortable with the automated systems.

In the study, a group of drivers was given vehicles equipped with cutting-edge driver assistance features, and researchers watched on camera as the drivers' behavior changed over the course of a month of getting used to their "smart" new cars.

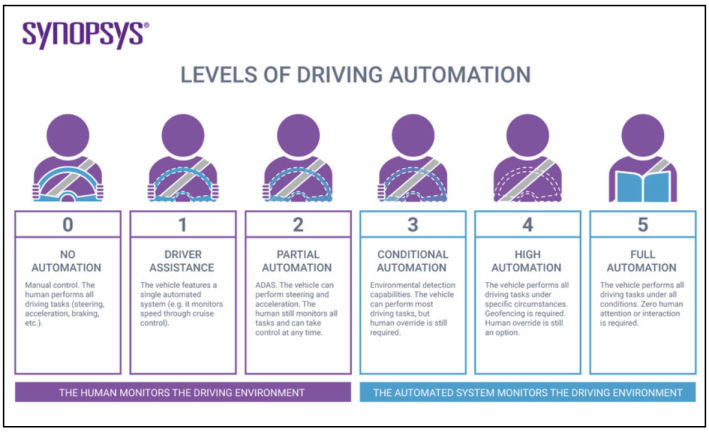

Half of the group was given "Level One" automated vehicles equipped with just one advanced safety feature: a souped-up version of cruise control (adaptive cruise control) that maintains a pre-set speed and following distance until the driver taps the brakes. The other half was given "Level Two" vehicles equipped with both adaptive cruise control and "pilot assist" technology, which automatically keeps the car centered in its lane, even if the driver stops steering.

(And for the record, that's about as automated as AVs get these days; vehicles at Level Three and above weren't even approved for commercial sale until about a week ago in Japan, and they won't be available in the U.S. for the foreseeable future.)

At first, all drivers in the study drove about as safely as they normally would without a computer's assistance. But as the experiment continued (and, presumably, as the drivers began to forget that cameras were watching their every move), both groups began showing signs of "driver disengagement," including letting their eyes slip from the road, glancing at their cell phones, or taking one hand off the wheel: three behaviors that have been correlated with a higher risk of crashing into a vulnerable road user or another driver.

As time went on, the drivers with the "smarter" cars started doing some seriously dumb and dangerous things. Drivers who relied on "pilot assist" technology were more than twice as likely as those with cruise control alone to let their attention slip from the dangerous task of piloting a multi-ton machine capable of killing its driver and other road users. They were also twelve times more likely than drivers of manually operated cars to take both hands off the wheel, often for long periods of time.

That's exactly what Rafaela Vasquez, the human driver of a "driverless" Uber, was doing when she killed Arizona pedestrian Elaine Herzberg in 2018. Because most automated vehicles still aren't advanced enough to recognize human bodies outside of clearly visible crosswalks (a pretty serious oversight in an auto-dominated country where it's often legally acceptable for cities not to mark crosswalks with paint at all), Vasquez was supposed to override the ride-hailing company's automated driving features and avoid Herzberg. Herzberg was attempting to push her bicycle across the road toward a ramp on the pedestrian island directly opposite where she stood.

Instead, Vasquez had both hands on her cell phone, which was playing an episode of *The Voice* at the time of the crash. And because Uber had disabled the car's emergency driver notification features, she didn't look up until it was too late to save Herzberg's life. Uber faced no charges for its role in Herzberg's death; Vasquez is currently awaiting trial for negligent homicide.

Luckily, none of the drivers in the study crashed during the study period, but Herzberg isn't the only American who has died because a driver overestimated the power of vehicle automation.

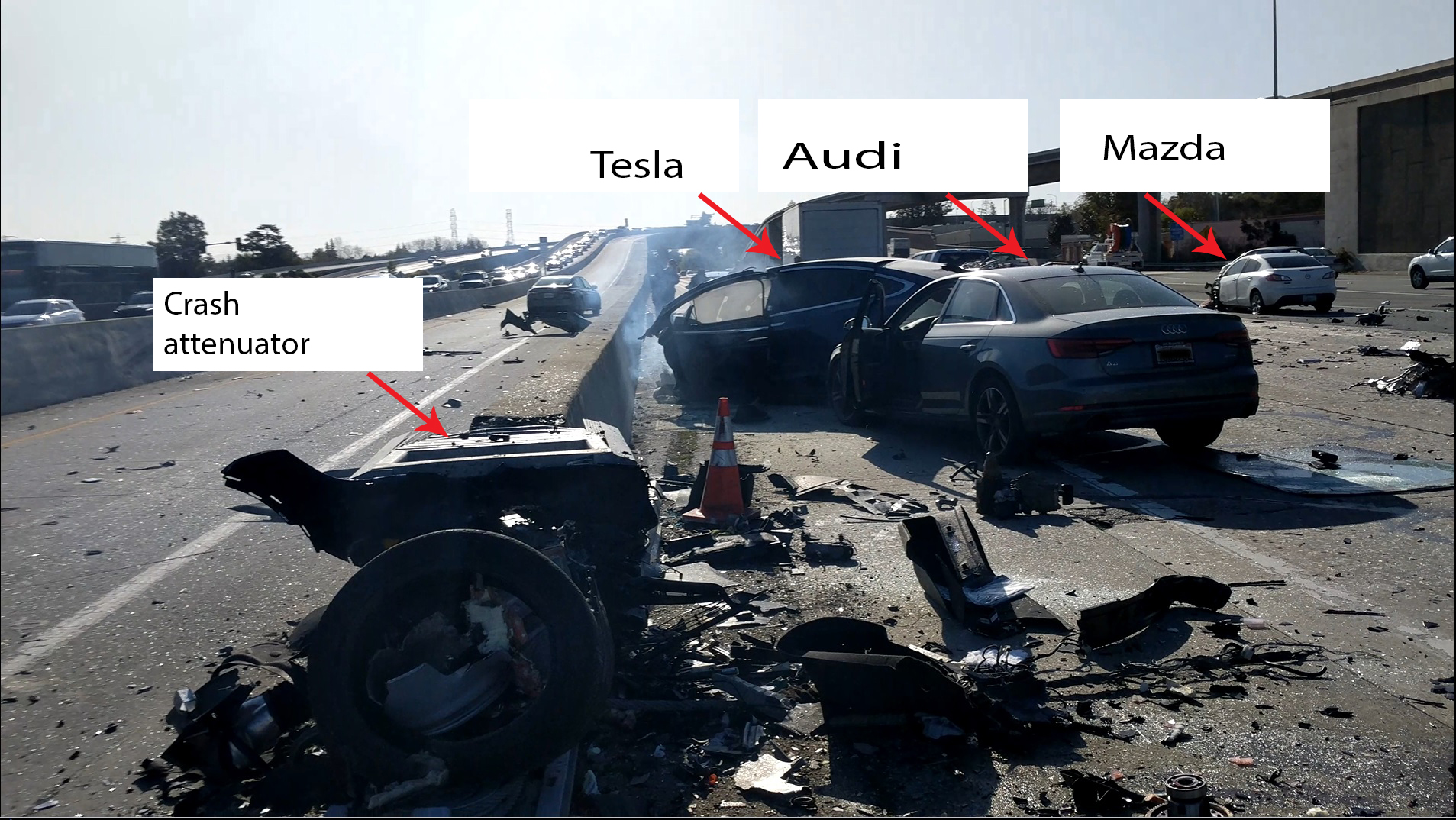

In 2018, for instance, California driver Walter Huang was killed when the "autopilot" system on his Tesla ignored a faded but accurate lane marking and instead followed a darker line on the pavement, steering him straight into a damaged guardrail and then into the path of two other drivers, one of whom was injured. Huang was playing a game on his cell phone at the time of the crash, even though he reportedly traveled past the deceptive lane marking frequently on his daily commute and had previously complained to family that the car's autopilot system behaved erratically at that spot. He made no attempt to brake or steer his vehicle, which was traveling at 75 miles per hour.

In its investigation of the crash, the National Transportation Safety Board concluded that "driver distraction" was the leading contributor to Huang's death, followed closely by a lack of onboard technology aimed at "risk mitigation pertaining to monitoring driver engagement" and "insufficient federal oversight of partial driving automation systems." The IIHS and MIT researchers reached a similar conclusion in their own study, and suggested that until AV manufacturers can develop the technology needed to monitor human drivers for signs of dangerous "automation complacency," their partially automated vehicles probably shouldn't be allowed on our roads.

“Crash investigators have identified driver disengagement as a major factor in every probe of fatal crashes involving partial automation we’ve seen,” said Ian Reagan, who led the study. “This study supports our call for more robust ways of ensuring the driver is looking at the road and ready to take the wheel when using Level Two systems ... [rather than] getting lulled into a false sense of security over time.”

Of course, autonomous vehicle technology is far from the only thing that makes drivers complacent about their own safety and the safety of others, because virtually everything about our legal system, our roadway designs, and our culture at large signals to drivers that car crashes are "accidents" that can't always be prevented. (Even though they totally can.) But at the absolute least, automated vehicle technology should be treated as the last line of defense in a comprehensive effort to end traffic violence, one rooted in reducing vehicle miles traveled, and not as a crutch that drivers can lean on when they're just too distracted, tired, or irresponsible to watch the road themselves.